If you have a professional requirement for extreme audio time-stretching, especially in the natural history domain, please use the contact details above

WORLD LEADER IN EXTREME TIME-STRETCHING OF AUDIO

TRANSIENT DETAIL INSPECTION OF MULTI-SECOND EVENTS

EXTREME EXPANSION OF MICRO-SOUND / MILLISECOND EVENTS (~0.001 SEC)

INCLUDING SPEED RAMPING, SPATIAL PRESENCE, RE-PITCHING AND PITCH LAYERING

TAILORED TO NATURAL HISTORY RECORDINGS, PHANTOM CAMERA SLO-MO VIDEO SYNCHRONISATION & EXPERIMENTAL AUDIO RESEARCH

Input / source format : 48kHz – 384kHz, 16bit – 32bit, 1 or 2 channel

Output / delivery format : 48kHz – 96kHz, 24bit or 32bit, 1, 2 or 4 channel

OVERVIEW

On-the-fly processing during playback enabling perceptual tuning by ear of parameter adjustments.

Multiple simultaneous approaches to the audio source employed to – blend tonal breadth with micro-textures, retune extreme low and high frequencies toward of the human audio spectrum, maximise three-dimensional involvement via pointillist spraying within stereo space and overlapping dual frequency stereo movement.

Required for audio inspection and synchronisation to slow-motion video, interface tailored to the precise control of parameters over an unlimited range.

The software is constantly in development and responsive to new contexts. All parameters have an unrestricted range with numerical or re-scalable virtual/physical controls. New parameters and methods of control can be incorporated based on output purposes, for instance, variable slow-down rates over a duration – smoothly transitioning from normal speed to 80x slower, freeze-frame audio, or multichannel diffusion etc.

Time stretch factor for synchronisation to ‘slo-mo’ video footage : the capture rate of high-speed video cameras used by natural-history film units are often clocking 2000fps. When slowed to the UK broadcast delivery rate of 25fps this requires audio duration to be stretched by a factor of 80x.

The technique developed here can be used far beyond this for ultra high-speed Phantom video cameras clocking in the region of 12,500fps, for slo-down factors in the realm of 500x, 1000x or 2000x slower and even beyond if required, while retaining temporal detail and frequency accuracy.

An application of this process was explored for the BBC’s GREEN PLANET series, extreme slo-mo’ video footage of a transient event – a Himalayan Balsam seed-pod exploding. The original audio file was provided by the sound recordist Chris Watson, with the 80x slow-motion video footage by the Green Planet producer Rupert Barrington. This is a world first application for this technique – hearing the actual recorded sound of the subject, time-stretched to the extreme – matched to the rate of the associated 2000fps Phantom camera footage. For reference to those who wish to hear this time-stretch result I’m showcasing it at the end of this article.

I am continuing to work with television companies while pushing the capabilities of the process even further.

GORSE SEED-POD POPPING

A gorse seed ‘pod pop’ is an extremely brief transient event, 1/100th second or 10ms, it is heard as nothing more than a tick.

Input : mono, 96kHz; recording by Chris Watson, Redgrave & Lopham Fen.

Output : stereo, 96kHz; series four extreme slow-down rates, each tuned to different sonic aspects of the exploding pod :

200 x slower

1000 x slower

2000 x slower

5000 x slower (1 second would become 83 minutes! 1/100th second becomes just over a minute, as here…)

BACKGROUND CHALLENGE

There’s two main, mutually related problems to overcome when slowing the playback of audio recordings – and unique to it ( – which have no analogues in slowing video footage).

The information or ‘samples’ within an audio file are finite and organised in relation to a single dimension of time (on a timeline corresponding exactly to the normal perception time). If we stretch this dimension to slow audio down, we also stretch the distance between the sample values. Video, as I shall explain, exists in two independent dimensions of time, so enabling slow-down of one without affecting the other.

When slowing audio :

1. Pitch drops dramatically.

2. Textural detail quickly becomes blurred.

1. For high-frequency audio sources we can usefully accept a drop in pitch if the stretch factor is not more than 8x (a fall of 3 octaves). For ‘normal’ frequency sources a stretch of about 4x (a fall of 2 octaves) is acceptable – though not ideal. If original pitch is to maintained there is already a challenge even before we approach the desired extreme stretch factors of 80x. For an 80x factor that’s an immense drop of nearly 9 octaves. Translated to such a low pitch many sounds become inaudible, more-so when 160x, 500x, 1000x or 5000x !! slow-down factors are applied.

2. When stretching audio duration the increased distance between samples must be filled by repeating those samples (the undesirable robotic ‘stair casing’ produced can be smoothed with anti-aliasing & filtering and disturbed with low amplitude random noise but these commonly used techniques are just applying different terms of smudging to reduce artefacts rather than retaining authentic textural detail).

With the 80x slow-down example this results in an 80-fold reduction in perceptual detail, or said otherwise audio becomes 80 times blurred. This not only blurs perceptual detail but also looses the ‘signature’ of the sound. Within small segments of sound various event occur, and how they relate to each other forms a signature as to what is creating the sound – like a language. If these relations between micro-events are stretched too apart the association between them breaks apart and the signature is lost. Everything ends-up sounding the same – arriving form some nondescript source.

If recording audio using an ultra-high sample rate, 192kHz as opposed to 48kHz (four times the broadcast sample rate), this helps, but still results in 20x less perceptual detail and signature loss (more significantly it does not help the 80x pitch drop).

INDUSTRY (WORKAROUND) SOLUTION

For super slow-motion applications (40x – 500x slower etc) sound field recordings are not able to used alongside their associated video footage – due to the immense pitch and detail drop as described above.

Instead, sound effects are manually created in a studio environment during post-production to accompany the slowed footage, imaginatively simulating what the event might have sounded like. Often using sound-making materials only marginally related to the actual subject, these Foley sound effects help engage the viewer in the visual event (albeit often in an almost cartoonish or comedic way). While evidently they cannot provide any authentic information about the sound related to the footage, at least they are closely tailored to it, and so preferable to the other oft applied solution, washes of composed music.

It may come as a surprise that the majority of sounds in natural history documentaries are manufactured artificially in a studio. That they may not strike the viewer as ‘made-up’ sounds is not that they are so realistic, but rather the viewer is distracted from critical listening due fast editing cuts, drowning music, etc. More seriously, the ubiquity of Foley sound has tempered our overall listening ability to ignore, or not tune-in to, the actual natural world. Sounds from the natural world are fast becoming the poor relative of the cinematically generated experience – to which the ear is now more stimulated by.

Between Foley effects and composed music, in many ways the time is ripe for a mind-shift in the focus of sound production in natural history documentaries – that sound reality or sound actuality (sound recorded at and around the time of filming) is an equally important information gathering and projection medium as video. Indeed due to sound’s spatially immersive character, it is paradoxically closer to the visual world as we behold it (and long has been), than video on screens. This project is part of this understanding.

DEVELOPED TECHNIQUE

There are two main theories that can be developed for sound time-stretching – while maintaining original pitch :

– spectral processing (identifying and maintaining average frequency via FFT analysis within v.brief sequential chunks of audio),

– granular processing (looping v.brief sequential chunks of audio at a speed independent to the overall playback speed).

Having developed both, I focused on granular processing to further push as the optimum method for audio ‘slow-mo’, concentrating on tonal complexity and detail inspection.

It is worth taking an extreme example to understand how granular theory works, so not rates of slow-down but completely stopping the recorded material. Also not to miss the obvious, for a moment talking in basic terms, if we stop the playback of video footage, we see? – a ‘freeze frame’, if we stop an audio recording, we hear? – nothing. Why is this?

If it were possible in the audio example, extreme case, to hear a ‘freeze frame’ of audio, we could then probably solve the less extreme cases of slow-down rates. An indeed with this process we have an analogue of the video freeze frame, we can hear audio even when playback is stopped! (which is akin to infinity slow).

What sorcery is this?

There are two relevant dimensions of time to the visual side of video capture. Ok so moving pictures are consecutive still pictures, or ‘frames’. Each frame exists in the dimension of time I’ll call the delivery rate of light (and our perception of it). Then there’s another time dimension, the rate of replacing or refreshing one picture or frame for another (the pseudo moving part). If this time dimension is stopped, the initial time dimension – the delivery rate of light and perception of it – continues – and so we see a still frame (evidently if we also removed this remaining time dimension, there would be blackness).

With audio there is only one dimension of time – the delivery rate of sound (and our perception of it). If we remove this one and only dimension of time, sound ceases to exist in time – and so silence.

The application of either spectral or granular theory overcomes this limitation of recorded sound by adding another dimension of time. In this way it mimics the video example, where there are discrete pictures or ‘still frames’ existing in a dimension of time separate to the time with which those pictures are refreshed.

HISTORY

Granular theory was originally conceived in terms of relatively large grain sizes, measured in seconds. These could be repeated from magnetic recording tape (see Iannis Xenakis). Then thanks to the medium of digital recording and computer processing (see Curtis Roads) the theory was applied to the microsecond and millisecond realm.

Most applications of granular theory now focus on incorporation into electronic musical instruments – granular synthesisers and as such offer fairly coarse ‘tactile’ controls of parameters (and restricted sample rates) tailored to musical outcomes. They are also often generous in ignoring or covering (with reverb) accuracy issues. For critical 96kHz and 192kHz audio inspection, an accuracy of parameter control, breadth of approaches and ability to flex and develop, responding to end-delivery and documentary context, require custom tools to be built, such as the one here.

VISUAL FRAME = AUDIO GRAIN

Whatever constitutes a frame in video, can this be applied to create a frame of audio? In short, yes, that is what we term a grain.

Plainly, a video still-frame contains a group of points in two-dimensional space, think of them as the halftone dots in a newspaper image perceived en-masse. Recorded audio has a ‘sample rate’ – single points of data perceived one at a time – within one dimension, but not a ‘frame rate’. The granular method collects a group of these single points together, to create a ‘frame’ of audio.

For some reason I’m thinking back to an espionage situation, where within a book a page of military drawings are being photographed to reduce them on a film, to the size of full-stop. That then cut-out from the developed negative and glued into a book. The top half of a semi-colon scraped away and replaced with the full-stop sized grain of information.

The ‘framing’ method to create a group of samples is called windowing. The window, as it is, contains no data or audio, it’s just a shape in time, an empty net. When a window is applied to the original audio, it becomes populated with audio data – it is then said to be a grain.

A grain of audio is a valuable thing, something like a molecule’s relationship to the atoms it contains. Like a chemist, once we net a grain we are then in position to remove it’s time dimension from the time dimension associated with playback of it’s overall file. In essence we ‘grow’ the audio file to a larger size while maintaining the integrity and potency of it’s chemical properties – rather than diluting them.

Grains are considered to be micro-sounds, so brief that every grain whatever it’s origin, is heard as the same a ‘tick’, with no other identifiable sound. Just to take a tape-measure out of the drawer, I’m working with grains of a length from 1/100 of a second or 10ms (for longer unraveling events), to 1/1000 of a second or 1ms (for v.brief transient events such as the gorse seed pod pop). Yet within their ‘micro-dot’ size, they contain the complete textural audio character of the fractional moment they frame. For specialist work on ultra micro-sound events, which have a duration of only 1ms-2ms, I can focus the grain size down even tighter to 1/10,000 of a second or 0.1ms. No other work in this territory that I know of is developing techniques for magnifying such short events, it is truly groundbreaking, the electron microscope of the sound world.

The purpose of creating grains of sound is that each can be played back at the original recording rate, so maintaining pitch and the original textual detail – yet while playing back the larger audio file at another rate, extending it’s duration – the slow-down factor.

If we stretch a recording to twice it’s original recorded duration (so playback is 2x slower) each grain is also stretched to twice it’s length. To maintain original pitch and detail we just double the grain’s playback rate. This is how the two dimensions of time work independently to each other. Though of course not only for nominal factors, such a 2x or 4x, but also for say 1000x stretches. If the overall ‘slow-motion’ speed of the file is 1000x slower, we then play each stretched grain back 1000x faster than it’s originally recorded rate – the result is that original pitch and detail is maintained.

In essence by employing two playback rates (or two time dimensions) we separate pitch control from playback speed. With each independent of the other, we can not only maintain pitch alongside extreme time-stretching but also freely modify pitch while not touching time-stretch duration.

Why modify pitch?

High speed transient events, are by definition, high frequency events, they occupy nearly no time at all. Within these momentary slithers, low frequencies cannot exist – they are too long. So therefore fleeting transient events are composed mostly of wavelengths that can generated by the event, high frequencies, very high pitch sounds.

In terms of where the human ear, and brain’s expertise is centred, sonically, it seeks textural audio information within the voice frequencies, these are in fact quite low 100-300Hz for their fundamental frequency, though higher for consonant attack. While very high frequency sound may indicate movement it is not as intensively processed and inspected as the range that language occupies.

With granular we can drop the playback speed of fleeting transient events down to 1000x slower, yet usefully control their pitch, maybe dropping it by 2x or 4x, so more toward the focus of our listening faculty. In fact it’s often useful to move very high frequencies to a couple of different new positions simultaneously. This multifarious technique to maximise tonal information was employed for a BBC’s GREEN PLANET experimental commission, as was a technique to provide two simultaneous distances of detail, presenting broader more impactful larger sonic forms superimposed upon closer finer textures.

BBC World Service radio programme about Chris Watson’s involvement with the GREEN PLANET, including an introduction to my ‘Granular Machine’ at around 20mins in…

IN THE STUDIO WITH CHRIS WATSON

GRAIN ENGINES

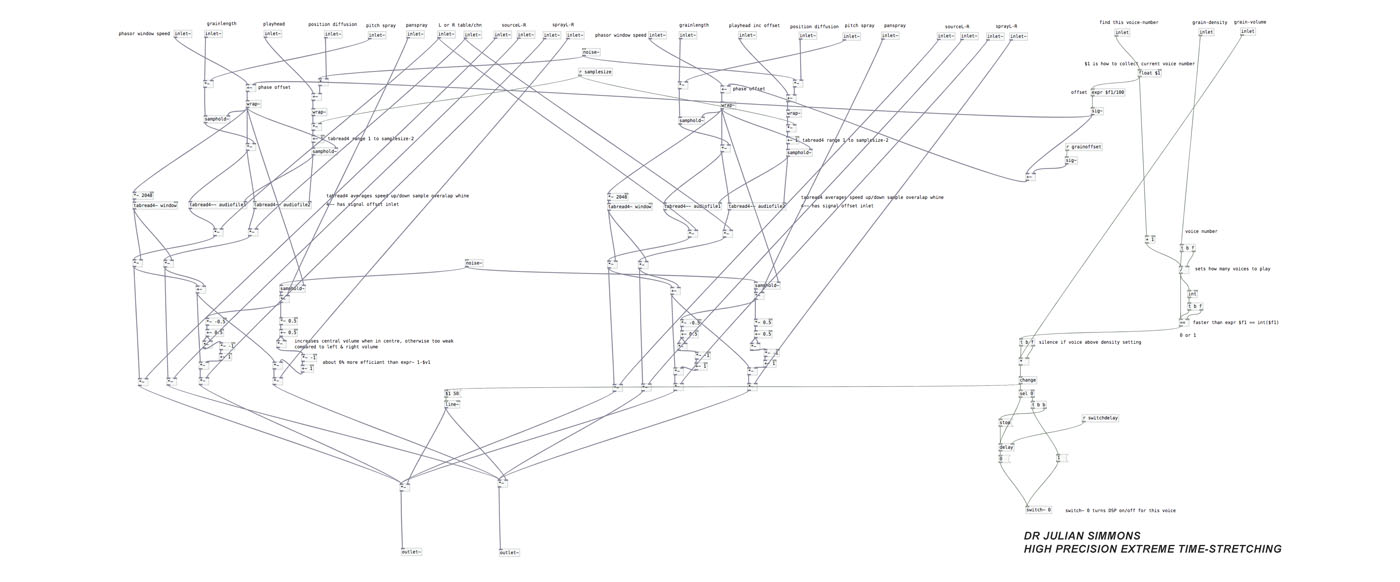

Behind the front-end interface many component calculations are maintained out of sight. Here the grain engine, which in fact is a group of four engines running in parallel (dual frequency across 2x channels), with up to 100 instances of this fourfold group processing audio simultaneously (400 grains @ 96kHz 32bit) :

WORLD-FIRST NATURAL HISTORY APPLICATION

An audio-visual synchronisation of the technique described above.

The sound of several Himalayan Balsam seed pods exploding, time-stretched by a factor between 70x and 160x slower – a lee-way to better fit the visuals. Due to keeping microphones out of shot while filming the sound recordings were captured separately to the Phantom video capture. For proof of concept, in the edit below I have arranged the video clips to tally as far a possible with my processing of the sound recordings.

21 wave files produced, presenting various processing angles on the seed-pod event, exemplifying the potential of this technique to unpack and highlight dynamic aspects of ultra-short transient events.

Original sound recordings by Chris Watson. Slo-mo’ video clips courtesy of the BBC Green Planet.

Material provided for research purposes only.

During the first 20 seconds, three source recordings at the original 1x playback rate (including the voice of Chris Watson) then follows the extreme stretched versions, culminating in a 1000x stretch.

ACTUAL SOUND OF HIMALAYAN BALSAM (not Foley effects)

USE HEADPHONES – time-stretched audio is spatially immersive :